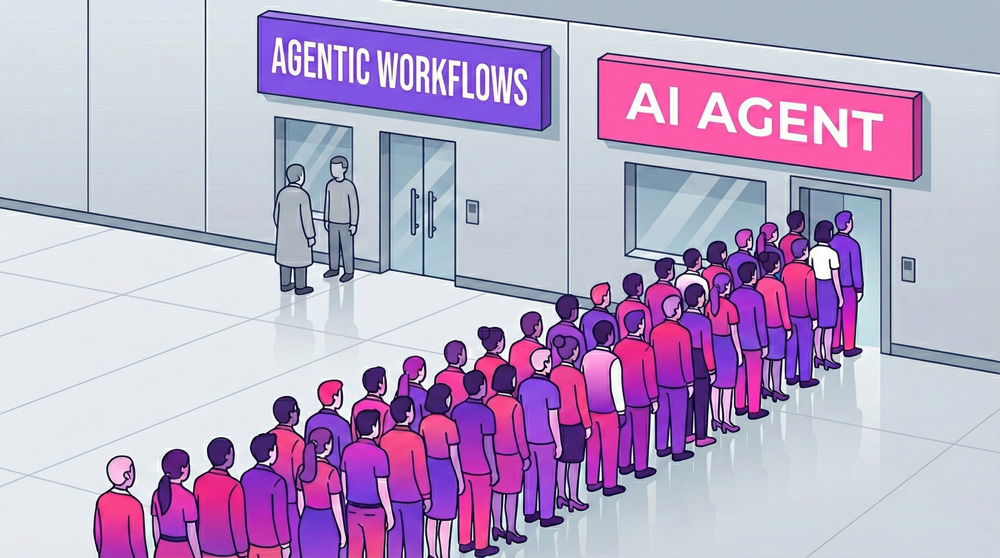

If you’ve scrolled through technical Twitter or LinkedIn recently, you’ve likely seen a variation of the cartoon illustrated above: a long, winding queue of people waiting for the "AI Agent" counter, while the "Agentic Workflow - Automation" counter next door sits virtually empty.

This image is funny because it’s true. It captures the exact psychological state of the tech industry in 2025. We are all lining up for the magic of Agents - digital workers that can autonomously plan, reason, and execute - while ignoring the unsexy reliability of Automation.

But here is the uncomfortable truth that connects this image to the 90% failure rate of AI projects: The "Automation" line is where the actual business value is being generated. And ironically, if you want to build a defensible AI company that doesn't get crushed by OpenAI or Google, you need to get out of the "Agent" line and start building "Agentic Workflows."

The Reliability Gap

Why is the "AI Agent" line so long? Because it promises zero marginal effort. The dream is that you give an LLM a vague goal ("Increase sales," "Fix the bugs"), and it magically figures out the rest. It’s the promise of labor replacement without management.

The "Agentic Workflow - Automation" line is empty because it implies work. Automation requires you to define the rules, map the edge cases, and build the guardrails. It’s deterministic. It’s boring.

But as our deep-dive research into AI failure rates shows, 90% of autonomous agents fail in production. Why? Because businesses run on reliability, not probability. A hallucinating agent that refunds the wrong customer is a liability, not an asset. The "Automation" counter delivers 99.9% reliability; the "Agent" counter delivers 80% reliability on a good day.

The Trap of the "Wrapper"

For the past two years, many founders joined the "AI Agent" queue by building Wrappers—thin user interfaces on top of GPT-4 or Claude.

The case of Perplexity AI serves as a cautionary tale here. Perplexity built a beautiful product that wrapped search engines and LLMs to give answers, not links. It reached $100M in revenue. But by our analysis, it is now fighting a multi-front war against:

- Its Suppliers: OpenAI launched SearchGPT.
- Its Distribution: Google launched AI Overviews.
- Its Content Source: Publishers like Dow Jones are suing for copyright infringement.

Because Perplexity’s core value was largely an interface innovation (a "wrapper" around search and reasoning), it was vulnerable. The "moat" was shallow. When the underlying models got better at search, the wrapper's value proposition eroded.

The Real Moat: Agentic Workflows (Systems of Action)

So, how do you protect yourself? How do you build something that isn't just a wrapper and doesn't fail like a rogue agent?

You combine the two queues. You build Agentic Workflows.

An Agentic Workflow is what happens when you take the reasoning power of an LLM and constrain it within the rigid, reliable guardrails of Automation. Instead of letting the AI "freestyle" a solution, you engineer a pipeline where the AI acts as a router or a processor within a deterministic system.
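
To make the "AI as a router inside a deterministic system" idea concrete, here is a minimal sketch. Everything in it is hypothetical: `fake_llm_classify` is a keyword-matching stand-in for a real model call, and the intent names and handlers are invented for illustration. The point is only the shape: the model proposes a label, and plain code decides what is allowed to happen.

```python
from typing import Callable, Dict


def fake_llm_classify(message: str) -> str:
    """Hypothetical stand-in for an LLM call. A real model could return anything."""
    text = message.lower()
    if "refund" in text:
        return "refund"
    if "where" in text and "order" in text:
        return "order_status"
    return "write_a_poem"  # simulates an off-script model answer


def handle_refund(message: str) -> str:
    # Deterministic business logic would live here (illustrative only).
    return "refund_ticket_created"


def handle_order_status(message: str) -> str:
    return "order_status_sent"


# The allow-list: the only actions the pipeline can ever take.
HANDLERS: Dict[str, Callable[[str], str]] = {
    "refund": handle_refund,
    "order_status": handle_order_status,
}


def route(message: str, classify: Callable[[str], str] = fake_llm_classify) -> str:
    """Let the model pick a branch, but never let it invent one."""
    intent = classify(message)
    handler = HANDLERS.get(intent)
    if handler is None:
        # Unknown or hallucinated intent: fall back to a human, do nothing risky.
        return "escalated_to_human"
    return handler(message)
```

In this sketch the worst case for a confused model is an escalation, not a wrong refund, because the model only chooses among branches that the code already knows how to execute.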

Here is why this architecture is incredibly hard to copy (and thus, defensible):

1. The State Management Moat

Simple agents are stateless; they react to the last message. Enterprise workflows are stateful. They need to remember that a contract was sent on Tuesday, viewed on Wednesday, and needs a follow-up on Friday. Building the State Machine that orchestrates this persistence - handling API timeouts, human approvals, and long-running processes - is a massive engineering challenge. Competitors can copy your prompt, but they can't copy your state machine.
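
As a rough illustration of the contract example, the sketch below models the workflow as an explicit transition table. The state names, event names, and the `ContractWorkflow` class are assumptions made for this example; a production system would also need durable storage, timers, and retry handling, none of which is shown here.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List, Tuple


class State(Enum):
    DRAFT = "draft"
    SENT = "sent"
    VIEWED = "viewed"
    FOLLOW_UP_DUE = "follow_up_due"
    SIGNED = "signed"


# Every legal move is listed here; anything absent is rejected.
TRANSITIONS: Dict[Tuple[State, str], State] = {
    (State.DRAFT, "send"): State.SENT,
    (State.SENT, "view"): State.VIEWED,
    (State.VIEWED, "timer_elapsed"): State.FOLLOW_UP_DUE,
    (State.VIEWED, "sign"): State.SIGNED,
    (State.FOLLOW_UP_DUE, "sign"): State.SIGNED,
}


@dataclass
class ContractWorkflow:
    state: State = State.DRAFT
    history: List[Tuple[str, str]] = field(default_factory=list)

    def apply(self, event: str) -> State:
        """Advance the workflow, or raise if the event is illegal in this state."""
        next_state = TRANSITIONS.get((self.state, event))
        if next_state is None:
            raise ValueError(
                f"event {event!r} not allowed in state {self.state.value!r}"
            )
        # Record (previous state, event) so the path taken can be audited later.
        self.history.append((self.state.value, event))
        self.state = next_state
        return self.state


workflow = ContractWorkflow()
for event in ["send", "view", "timer_elapsed"]:
    workflow.apply(event)
print(workflow.state.value, workflow.history)
```

The table itself is trivial to copy. The hard part the paragraph above refers to is everything around it: persisting this state across days, surviving timeouts, and pausing for human approval.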

2. The Evaluation ("Eval") Moat

How do you know your legal AI won't hallucinate a precedent? You test it against a Golden Dataset - thousands of expert-verified examples. Companies like Harvey (Legal) and Abridge (Healthcare) have spent years compiling these proprietary test sets. This allows them to "freeze" expert tacit knowledge into their system. A clone might look like Harvey, but without the eval set, it will be too dangerous to deploy.
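
A golden-dataset check can be sketched in a few lines. The dataset, the `toy_system` function, and the 0.9 threshold below are all made up for illustration and are not how Harvey or Abridge evaluate their products. Real eval sets are far larger and usually need fuzzier scoring than exact string matching.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical expert-verified examples: (prompt, expected answer).
GOLDEN_SET: List[Tuple[str, str]] = [
    ("Is clause 4 a non-compete?", "yes"),
    ("Is clause 7 an indemnity?", "yes"),
    ("Does the contract auto-renew?", "no"),
    ("Is there a governing-law clause?", "yes"),
]


def toy_system(prompt: str) -> str:
    """Stand-in for the AI system under test; it gets one answer wrong on purpose."""
    answers = {
        "Is clause 4 a non-compete?": "yes",
        "Is clause 7 an indemnity?": "yes",
        "Does the contract auto-renew?": "yes",  # wrong: the golden answer is "no"
        "Is there a governing-law clause?": "yes",
    }
    return answers.get(prompt, "unknown")


def evaluate(
    system: Callable[[str], str],
    golden_set: List[Tuple[str, str]],
    threshold: float,
) -> Dict[str, object]:
    """Run every golden example and gate deployment on the pass rate."""
    failures = []
    for prompt, expected in golden_set:
        actual = system(prompt)
        if actual.strip().lower() != expected.strip().lower():
            failures.append((prompt, expected, actual))
    pass_rate = 1 - len(failures) / len(golden_set)
    return {
        "pass_rate": pass_rate,
        "failures": failures,
        "deployable": pass_rate >= threshold,
    }


report = evaluate(toy_system, GOLDEN_SET, threshold=0.9)
print(report)
```

The harness is the cheap part. The defensible asset is the set of expert-verified examples it runs against.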

3. The Integration Moat

Wrappers chat; Systems of Action do. Defensible agents don't just give advice; they write directly into the database (System of Record).

- Abridge doesn't just transcribe; it writes directly into the Epic EHR system, saving doctors hours.
- Sierra doesn't just chat; it processes returns directly in the Shopify backend.

Once an agent is integrated into a company's core "System of Record," ripping it out becomes incredibly painful.

Conclusion: Choose the Empty Line

The image from the start of this post is a map of opportunity. The crowded "AI Agent" line is full of tourists and wrappers. The empty "Agentic Workflow - Automation" line is where the builders are.

To survive the next phase of AI, stop trying to build a magic generalist agent. Start building a boring, reliable, stateful System of Action. Use AI to handle the messy, unstructured reasoning, but use rigid automation to handle the process, the state, and the outcome.

That’s how you turn a probabilistic toy into a defensible business.